Startups are under constant pressure to ship products that are simple, affordable, and scalable without compromising performance or user trust. For years, AI systems have relied heavily on cloud infrastructure to meet these demands.

That approach works, but it comes with real trade-offs: rising compute costs, latency, and growing concerns around data privacy.

Increasingly, machine learning workloads are moving away from centralized clouds and closer to where data is generated: on the devices themselves. This shift toward edge computing is accelerating and reshaping how startups design and launch their MVPs.

What is Edge AI and why does it matter for startups?

Edge AI refers to running machine learning models directly on devices such as smartphones, sensors, gateways, or edge servers, rather than sending data to a remote cloud for processing (Shi et al., 2016).

Processing data locally enables low-latency, real-time decision-making while reducing dependence on continuous network connectivity. Because raw data no longer has to leave the device for every prediction, it also cuts cloud compute and data transfer costs.

Recent advances in specialized hardware have made this approach viable much earlier than expected. Platforms like Apple’s Neural Engine, Google’s Edge TPU, and modern microcontrollers now provide enough on-device compute to support practical inference at scale (Zhang et al., 2021).

For startups, this matters because speed and cost discipline are non-negotiable. Cloud-based AI pipelines can become expensive quickly, especially as usage grows.

Edge AI removes much of that overhead, allowing teams to deliver intelligent features without taking on a heavy infrastructure bill.

Key advantages of Edge AI for MVPs

- Reduced costs and lower burn rate

Running inference on-device eliminates recurring cloud compute costs for many use cases. For early-stage startups, where runway and margins are tight, this can have a meaningful impact on sustainability (Sarker, 2020).

- Enhanced privacy and security

User expectations around privacy have shifted, particularly in regions governed by GDPR and CCPA. Processing data locally reduces exposure to compliance risk and builds trust, especially in sensitive sectors like health or finance.

In an environment where users are wary of large cloud providers, local processing can become a competitive advantage rather than just a technical detail (Sicari et al., 2015).

- Real-time performance

Applications such as personalized recommendations, health monitoring, and predictive systems rely on fast response times. Cloud-based inference introduces unavoidable latency due to network round trips.

Edge AI avoids that bottleneck, enabling near real-time decision-making and a smoother user experience, something users notice immediately even if they cannot articulate why (Zhang et al., 2021).

Why now? The strategic timing for Edge AI adoption

The timing for edge-first MVPs is especially favorable. Consumer hardware is advancing rapidly, and AI acceleration is now a core feature rather than a niche capability.

Apple, Qualcomm, and Intel have all introduced dedicated neural processing units (NPUs) in recent product lines. These are designed to support fast, energy-efficient on-device inference, which lowers the barrier to edge deployment.

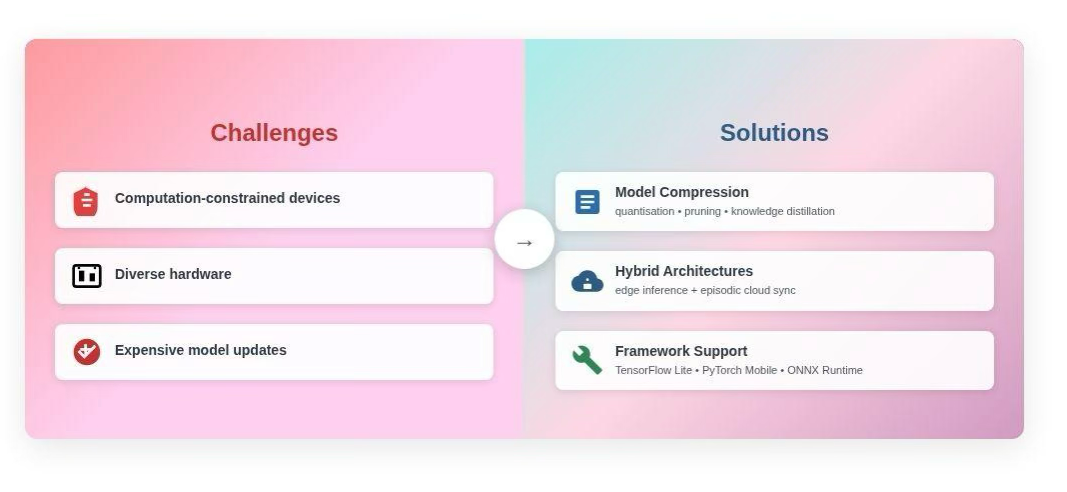

At the same time, development tooling has matured. Frameworks such as TensorFlow Lite, PyTorch Mobile, and ONNX Runtime reduce friction in deploying and maintaining models across diverse devices.

What once required highly specialized teams can now be handled by a small, focused engineering group, which is the kind of team most early-stage companies have.

Challenges and how startups can overcome them

Edge AI does come with constraints. Limited compute capacity, device fragmentation, and model update complexity are common concerns. However, these challenges are increasingly well understood and manageable.

Model compression techniques, such as quantization, pruning, and knowledge distillation, allow models to run efficiently on constrained hardware without unacceptable accuracy loss.
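To make the quantization idea concrete, here is a minimal sketch of symmetric per-tensor int8 quantization in plain Python. It is an illustration under simplifying assumptions, not how any particular framework implements it: the function names `quantize_int8` and `dequantize` and the sample weights are made up for this example, and production toolchains typically add calibration data and per-channel scales.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map float weights onto the int8 range [-127, 127] with one shared scale."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # The scale maps the largest magnitude onto 127; guard against all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from their int8 representation."""
    return [q * scale for q in quantized]


# Hypothetical weights, purely for illustration.
weights = [0.42, -1.27, 0.05, 0.9, -0.33]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# The rounding error per weight is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most `scale / 2` per weight. Whether that error is acceptable depends on the model and has to be checked against validation data.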

Hybrid architectures, combining on-device inference with periodic cloud synchronization, provide a practical balance between performance and flexibility.
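One way such a hybrid setup might look is sketched below. All names here are hypothetical: `HybridEdgeClient`, the `predict` callable standing in for an on-device model, and the `upload` callable standing in for a cloud endpoint. The point is only the shape of the pattern: decisions are made locally, and results are buffered and uploaded when an interval has elapsed and the network cooperates.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class HybridEdgeClient:
    """Runs inference locally and batches results for occasional cloud upload."""

    predict: Callable[[List[float]], float]  # on-device model (hypothetical)
    upload: Callable[[List[dict]], bool]     # cloud sync call (hypothetical); True on success
    sync_interval_s: float = 300.0
    _pending: List[dict] = field(default_factory=list)
    _last_sync: float = field(default_factory=time.monotonic)

    def infer(self, features: List[float]) -> float:
        # The decision is made locally, so it never waits on the network.
        result = self.predict(features)
        self._pending.append({"features": features, "result": result})
        return result

    def maybe_sync(self, now: Optional[float] = None) -> bool:
        """Upload buffered records if the interval has elapsed; keep them on failure."""
        now = time.monotonic() if now is None else now
        if not self._pending or now - self._last_sync < self.sync_interval_s:
            return False
        if self.upload(list(self._pending)):
            self._pending.clear()
            self._last_sync = now
            return True
        return False  # offline or upload failed: retry on a later call
```

In this sketch a failed upload leaves the buffer intact, so the device keeps working offline and catches up later. A real deployment would also need to cap the buffer size and decide which records are worth sending at all.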

When applied thoughtfully, these approaches allow startups to benefit from edge deployment without accumulating long-term technical debt.

Edge AI and the new MVP playbook

Traditional MVPs often prioritized speed to market through cloud-based services. While fast to deploy, those systems could be expensive to scale and vulnerable to latency or connectivity issues. Edge AI changes that equation.

By shifting inference onto the device, startups can build MVPs that:

- Operate reliably in low-connectivity environments, opening access to underserved markets

- Protect user data by default, strengthening trust in regulated sectors

- Deliver the responsiveness required for AR/VR, robotics, and wearable technologies

For example, a remote patient monitoring system deployed in rural areas can trigger alerts instantly when anomalous vital signs are detected, even without reliable internet access.

Agricultural sensors far from central infrastructure can optimize irrigation in real time. These are practical advantages available today, not speculative scenarios.
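As a rough illustration of the patient-monitoring case, the sketch below flags anomalous readings entirely on-device using a rolling z-score. This is a deliberately simple stand-in for a trained model: the class name `VitalSignMonitor`, the window size, and the threshold are assumptions chosen for the example, not clinically validated values.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque


class VitalSignMonitor:
    """Flags readings that deviate sharply from a rolling on-device baseline."""

    def __init__(self, window: int = 30, z_threshold: float = 3.0, min_samples: int = 10):
        self.history: Deque[float] = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def check(self, reading: float) -> bool:
        """Return True if the reading is anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            # Guard against a flat baseline, where sigma would be zero.
            if sigma > 0 and abs(reading - mu) / sigma > self.z_threshold:
                anomalous = True
        # Only normal readings update the baseline, so a sustained episode keeps alerting.
        if not anomalous:
            self.history.append(reading)
        return anomalous


# Hypothetical heart-rate stream (beats per minute), ending in a sudden spike.
monitor = VitalSignMonitor()
heart_rates = [72, 74, 71, 73, 75, 72, 70, 74, 73, 72, 71, 140]
alerts = [hr for hr in heart_rates if monitor.check(hr)]
```

Nothing in `check` touches the network, so the alert fires whether or not the device is connected. Readings and alerts could still be uploaded later, once a connection is available.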

Looking ahead: The Edge as the new cloud

Industry forecasts point to continued decentralization of data processing. Gartner predicts that by 2025, 75% of enterprise-generated data will be created and processed outside a traditional centralized data center or cloud (Gartner, 2021). This represents a structural shift in how intelligent systems are delivered, not just a marginal optimization.

For startups, the takeaway is clear: Edge AI is not only a cost-saving measure; it is a strategic design choice. Teams that adopt edge-first architectures early can differentiate on performance, privacy, and user experience, factors that increasingly determine whether an MVP gains traction or stalls.

As hardware improves and development tools become more accessible, edge computing is likely to become the default foundation for intelligent products rather than the exception.