Elon Musk’s artificial intelligence company, xAI, is about to launch the early beta version of Grokipedia, a new project to rival Wikipedia.

Grokipedia has been described by Musk as a response to what he views as the “political and ideological bias” of Wikipedia. He has promised that it will provide more accurate and context-rich information by using xAI’s chatbot, Grok, to generate and verify content.

Is he right? The question of whether Wikipedia is biased has been debated since its creation in 2001.

Wikipedia’s content is written and maintained by volunteers who can only cite material that already exists in other published sources, since the platform prohibits original research. This rule, which is designed to ensure that facts can be verified, means that Wikipedia’s coverage inevitably reflects the biases of the media, academia and other institutions it draws from.

This is not limited to political bias. For example, research has repeatedly shown a significant gender imbalance among editors, with around 80%–90% identifying as male in the English-language version.

Because most of the secondary sources used by editors have also historically been authored by men, Wikipedia tends to reflect a narrower view of the world: a repository of men’s knowledge rather than a balanced record of human knowledge.

The volunteer problem

Bias on collaborative platforms often emerges from who participates rather than top-down policies. Voluntary participation introduces what social scientists call self-selection bias: people who choose to contribute tend to share similar motivations, values and often political leanings.
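The arithmetic behind self-selection can be shown with a minimal sketch. The two groups and their participation rates below are invented purely for illustration and are not drawn from any study of Wikipedia or Community Notes.

```python
# Hypothetical population: two groups of equal size that differ only in
# how willing they are to volunteer. All numbers are invented.
population_share = {"group_a": 0.5, "group_b": 0.5}
participation_rate = {"group_a": 0.02, "group_b": 0.005}

def contributor_share(pop, rate):
    """Share of each group among the people who actually contribute."""
    weights = {g: pop[g] * rate[g] for g in pop}
    total = sum(weights.values())
    return {g: w / total for g, w in weights.items()}

shares = contributor_share(population_share, participation_rate)
```

Under these assumed rates, a group that makes up half the population ends up supplying about 80% of the contributors, without any top-down policy favouring it.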

Just as Wikipedia depends on such voluntary participation, so does, for example, Community Notes, the fact-checking feature on Musk’s X (formerly Twitter). An analysis of Community Notes, which I conducted with colleagues, shows that its most frequently cited external source – after X itself – is actually Wikipedia.

Other sources commonly used by note authors mainly cluster toward centrist or left-leaning outlets. Note authors even use the same list of approved sources as Wikipedia – the crux of Musk’s criticism of the open online encyclopedia. Yet no one calls out Musk for this bias.

Wikipedia at least remains one of the few large-scale platforms that openly acknowledges and documents its limitations. Neutrality is enshrined as one of its five foundational principles. Bias exists, but so does an infrastructure designed to make that bias visible and correctable.

Articles often include multiple perspectives, document controversies, even dedicate sections to conspiracy theories such as those surrounding the September 11 attacks. Disagreements are visible through edit histories and talk pages, and contested claims are marked with warnings. The platform is imperfect but self-correcting, and it is built on pluralism and open debate.

Is AI unbiased?

If Wikipedia reflects the biases of its human editors and their sources, AI faces the same problem with the biases in its training data.

Large language models (LLMs) such as those used by xAI’s Grok are trained on enormous datasets collected from the internet, including social media, books, news articles and Wikipedia itself. Studies have shown that LLMs reproduce existing gender, political and racial biases found in their training data.

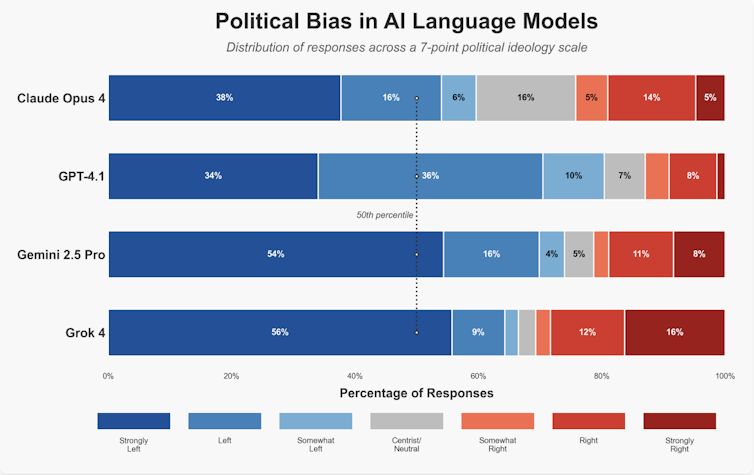

Musk has claimed that Grok is designed to counter such distortions, but Grok itself has been accused of bias. One study, in which each of four leading LLMs was asked 2,500 questions about politics, found that Grok is more politically neutral than its rivals but still has a left-of-centre bias (the others lean further left).

Michael D’Angelo/Promptfoo, CC BY-SA

If the model behind Grokipedia relies on the same data and algorithms, it is difficult to see how an AI-driven encyclopedia could avoid reproducing the very biases that Musk attributes to Wikipedia.

Worse, LLMs could exacerbate the problem. They operate probabilistically, predicting the most likely next word or phrase based on statistical patterns rather than deliberation among humans. The result is what researchers call an illusion of consensus: an authoritative-sounding answer that hides the uncertainty or diversity of opinions behind it.

As a result, LLMs tend to homogenise political diversity and favour majority viewpoints over minority ones. Such systems risk turning collective knowledge into a smooth but shallow narrative. When bias is hidden beneath polished prose, readers may no longer even recognise that alternative perspectives exist.
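The way a single confident-sounding answer can mask disagreement can be sketched in a few lines. This is a toy illustration only: the three "views" and their probabilities are hypothetical, not output from Grok or any other model, and picking the single most likely option (greedy selection) is just one simple decoding strategy.

```python
import math

# Hypothetical probabilities a model might assign to three competing
# continuations of a contested question. Numbers are invented.
next_phrase_probs = {
    "view A": 0.40,
    "view B": 0.35,
    "view C": 0.25,
}

def greedy_pick(probs):
    """Return the single most likely continuation, discarding the rest."""
    return max(probs, key=probs.get)

def entropy_bits(probs):
    """Shannon entropy in bits: how spread out the distribution is."""
    return -sum(p * math.log2(p) for p in probs.values() if p > 0)

answer = greedy_pick(next_phrase_probs)
hidden_share = 1.0 - next_phrase_probs[answer]  # probability mass not shown
uncertainty = entropy_bits(next_phrase_probs)
```

In this sketch the reader sees only "view A", even though the alternatives together carry 60% of the probability and the distribution is close to maximally uncertain for three options.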

Baby/bathwater

Having said all that, AI can still strengthen a project like Wikipedia. AI tools already help the platform to detect vandalism, suggest citations and identify inconsistencies in articles. Recent research highlights how automation can improve accuracy if used transparently and under human supervision.

AI could also help transfer knowledge across different language editions and bring the community of editors closer. Properly implemented, it could make Wikipedia more inclusive, efficient and responsive without compromising its human-centered ethos.

Just as Wikipedia can learn from AI, the X platform could learn from Wikipedia’s model of consensus building. Community Notes allows users to submit and rate notes on posts, but its design limits direct discussion among contributors.

Another research project I was involved in showed that deliberation-based systems inspired by Wikipedia’s talk pages improve accuracy and trust among participants, even when the deliberation happens between humans and AI. Encouraging dialogue, rather than the current simple up- or down-voting, could make Community Notes more transparent, pluralistic and resilient against political polarisation.

Profit and motivation

A deeper difference between Wikipedia and Grokipedia lies in their purpose and perhaps their business model. Wikipedia is run by the non-profit Wikimedia Foundation, and the majority of its volunteers are motivated mainly by public interest. In contrast, xAI, X and Grokipedia are commercial ventures.

Although profit motives are not inherently unethical, they can distort incentives. When X began selling its blue check verification, credibility became a commodity rather than a marker of trust. If knowledge is monetised in similar ways, the bias may increase, shaped by what generates engagement and revenue.

True progress lies not in abandoning human collaboration but in improving it. Those who perceive bias in Wikipedia, including Musk himself, could make a greater contribution by encouraging editors from diverse political, cultural and demographic backgrounds to participate – or by joining the effort personally to improve existing articles. In an age increasingly shaped by misinformation, transparency, diversity and open debate are still our best tools for approaching truth.

Taha Yasseri receives funding from Research Ireland and Workday.