Conversational AI systems have shown considerable promise in healthcare, passing medical licensing exams and generating diagnostic plans in simulated clinical conversations. However, a critical gap separates these capabilities from real-world deployment: medical tasks such as gathering patient information, providing diagnoses, and crafting treatment plans are safety-critical activities that require oversight by licensed professionals.

Healthcare already has established frameworks for this kind of oversight. Experienced physicians routinely supervise nurse practitioners (NPs) and physician assistants (PAs), granting them considerable autonomy while remaining accountable for patient care decisions. This existing model inspired researchers at Google DeepMind to develop a new paradigm for AI medical consultations.

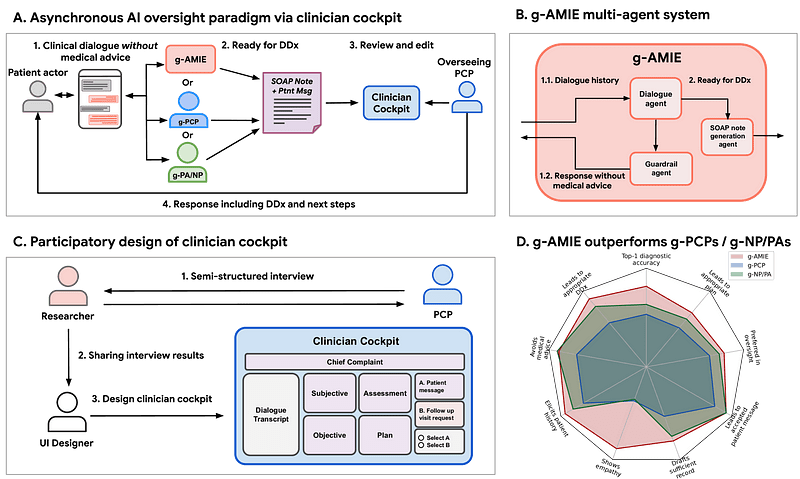

The proposed solution is asynchronous oversight: a framework in which AI systems can conduct comprehensive patient interviews but must defer all individualized medical advice to licensed physicians. Built around the Articulate Medical Intelligence Explorer (AMIE), this approach creates a clear separation between information gathering and medical decision-making.

Designing Asynchronous Oversight for Medical AI

The asynchronous oversight framework operates on a fundamental principle: separate history-taking from the delivery of medical advice by requiring human oversight between these two phases. This separation addresses the core challenge of preserving the AI's ability to conduct thorough consultations while keeping physicians accountable for every clinical decision.
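The phase separation can be pictured as a small state machine. The sketch below is a hypothetical illustration, not AMIE's implementation: the class names `Phase` and `Consultation` and the methods `record_turn`, `submit_for_review`, and `physician_approve` are assumptions introduced here. It only shows the ordering constraint the framework describes, namely that nothing reaches the patient as advice until a physician has reviewed it.

```python
from enum import Enum, auto
from typing import List, Optional


class Phase(Enum):
    """Hypothetical phases of an asynchronously overseen consultation."""

    HISTORY_TAKING = auto()    # AI interviews the patient; no individualized advice allowed
    AWAITING_REVIEW = auto()   # AI's draft assessment is queued for a licensed physician
    ADVICE_DELIVERED = auto()  # physician-approved advice has been released to the patient


class Consultation:
    """Minimal sketch: advice is released only after a physician signs off."""

    def __init__(self) -> None:
        self.phase = Phase.HISTORY_TAKING
        self.transcript: List[str] = []
        self.draft_plan: Optional[str] = None
        self.approved_plan: Optional[str] = None

    def record_turn(self, text: str) -> None:
        """Append an interview turn; only allowed while history-taking is open."""
        if self.phase is not Phase.HISTORY_TAKING:
            raise RuntimeError("history-taking is closed")
        self.transcript.append(text)

    def submit_for_review(self, draft_plan: str) -> None:
        """End the interview and hold the AI's draft for physician review."""
        if self.phase is not Phase.HISTORY_TAKING:
            raise RuntimeError("consultation was already submitted")
        self.draft_plan = draft_plan
        self.phase = Phase.AWAITING_REVIEW

    def physician_approve(self, edited_plan: Optional[str] = None) -> str:
        """Physician accepts the draft as-is or replaces it with an edited version."""
        if self.phase is not Phase.AWAITING_REVIEW:
            raise RuntimeError("nothing is awaiting review")
        approved = edited_plan if edited_plan is not None else self.draft_plan
        assert approved is not None  # guaranteed by submit_for_review
        self.approved_plan = approved
        self.phase = Phase.ADVICE_DELIVERED
        return approved
```

Calling `record_turn` after `submit_for_review`, or `physician_approve` before it, raises `RuntimeError`. This is the code-level analogue of the rule that the AI cannot slip advice into the conversation ahead of human review. The review step is "asynchronous" because nothing forces `physician_approve` to run during the patient interview; it can happen whenever the supervising physician is available.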

The system defines individualized medical advice as comprising two categories: diagnoses (identifying specific medical conditions tailored to an individual patient's situation) and management recommendations (suggesting treatments, medications, lifestyle changes, tests, or referrals). By strictly prohibiting both during AI consultations, the framework draws a clear boundary around what the AI may do autonomously.
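To make the two-category boundary concrete, the sketch below labels outgoing AI messages by category and blocks any that contain individualized advice during history-taking. It is a deliberately crude, hypothetical stand-in: the names `AdviceCategory`, `flag_individualized_advice`, and `is_allowed_during_intake` and the trigger phrases are all assumptions made here, and keyword matching is not how AMIE enforces the boundary. A real guardrail would need a far more robust classifier.

```python
from enum import Enum
from typing import Set


class AdviceCategory(Enum):
    """The two kinds of individualized medical advice the framework prohibits."""

    DIAGNOSIS = "diagnosis"    # naming a specific condition for this patient
    MANAGEMENT = "management"  # treatments, medications, lifestyle changes, tests, referrals


# Hypothetical trigger phrases, for illustration only.
_TRIGGERS = {
    AdviceCategory.DIAGNOSIS: ("you likely have", "your diagnosis is", "this is probably"),
    AdviceCategory.MANAGEMENT: ("i recommend", "start taking", "you should take",
                                "get a test", "see a specialist"),
}


def flag_individualized_advice(message: str) -> Set[AdviceCategory]:
    """Return the advice categories a draft AI message appears to contain."""
    text = message.lower()
    return {cat for cat, phrases in _TRIGGERS.items() if any(p in text for p in phrases)}


def is_allowed_during_intake(message: str) -> bool:
    """During history-taking, only messages with no flagged advice may be sent."""
    return not flag_individualized_advice(message)
```

A question such as "How long have you had the cough?" passes, while "I recommend you start taking ibuprofen." is flagged as `MANAGEMENT` and held back. Under the framework, that content would instead go into the draft the physician reviews.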